Advances in ground robots, small drones, artificial intelligence, machine learning and data processing are being combined in an effort to help soldiers “see” the battlefield in ways hardly imagined until recently.

Four Army Research Lab projects at various levels of development aim to improve soldiers’ situational awareness by enabling them to spot roadside bombs, track targets, distinguish people in crowds and even merge their own human vision with computer vision for a full-scope view of the tactical edge of combat.

Dr. Alexander Kott, ARL’s chief scientist, spoke with Army Times about work that the lab and its partner researchers are doing in the area.

Kott looked to history to help contextualize what’s been happening in recent years and what’s still to come in terms of melding new technologies to provide soldiers a new vision for the battlespace.

Field telescopes or spyglasses dramatically increased the ability of ground warfare officers to conduct maneuvers and command forces in the 1600s.

Combinations of mathematics, aerial observations and communications technologies allowed artillery forces in World War I to strike at unseen targets. Beginning in the early 1940s, during World War II, nascent night-vision technology first gave forces the ability to fight effectively in the dark.

Kott said the new ways in which scientists are combining technologies also signify a historic change.

“We are in the midst of a revolution in how the battlefield is being perceived,” Kott said.

A lot of that is happening because wearable technologies, from goggles to body-worn sensors, are only now becoming feasible to use effectively.

One project already in the works is an “object detection module” that allows the soldier at the tactical level to use on-hand small drones to process data that would help with in-vehicle target detection and threat prioritization.

That kind of foundational ability has already been used in various Army Combat Capabilities Development Command programs. For example, small drone tactical intelligence in the ATLAS program provides soldiers with faster target identification, acquisition and engagement than manual methods. And the object detection technology within the Artificial Intelligence for Maneuver & Mobility program aids autonomous robot navigation, according to an ARL statement.

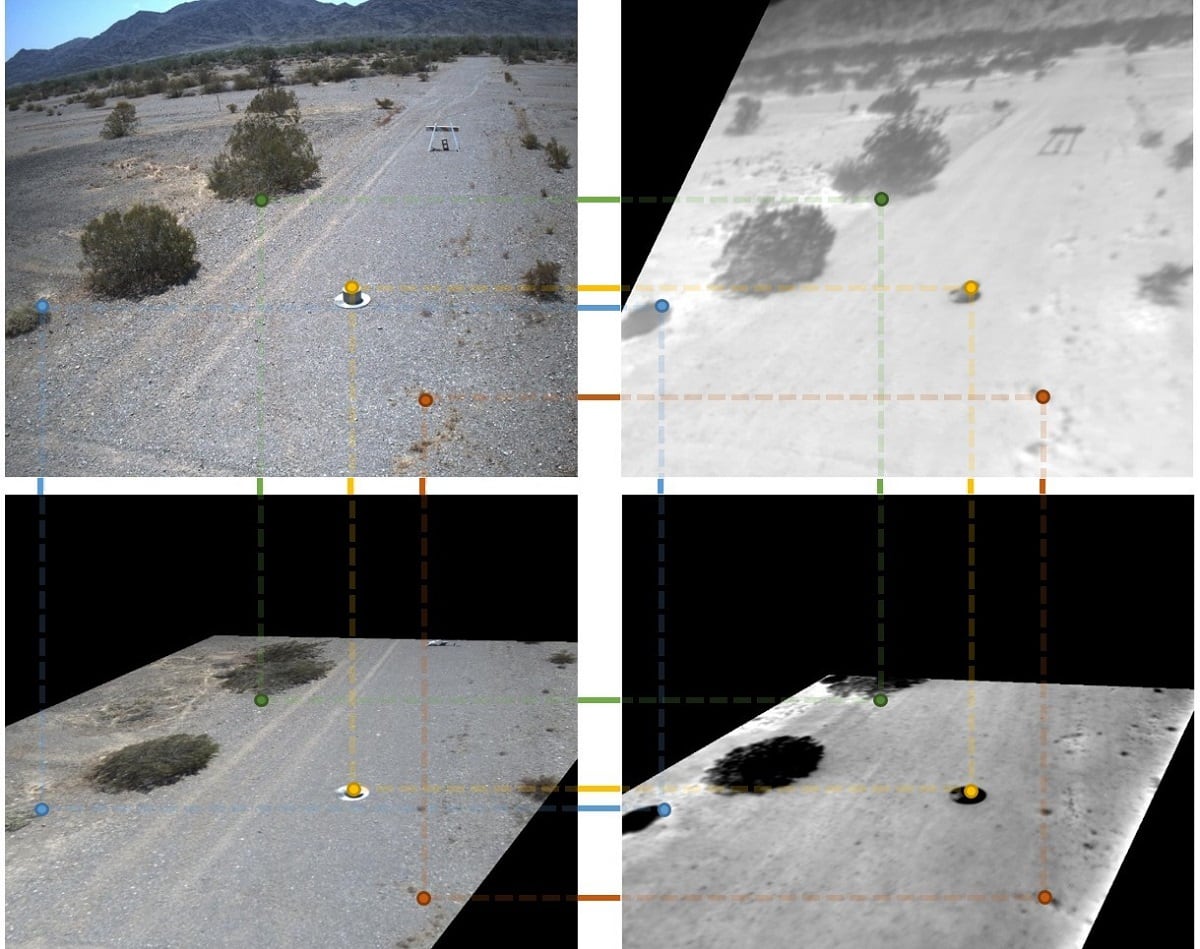

Another example is a project announced earlier this year that is not a single device but a drone-based multi-sensor system that allows for standoff detection of explosive hazards.

Scientists have connected a variety of drone-based sensors with machine learning algorithms to detect the range of explosive emplacements used against vehicle convoys.

An early phase of the program used airborne synthetic aperture radar, ground vehicular and drone LIDAR, high-definition electro-optical cameras, long-wave infrared cameras and non-linear junction detection radar.

The test ran along a 7-kilometer track that had 625 emplacements, which included explosive hazards, simulated clutter and calibration targets.

That dense and complex terrain was used to gather terabytes of data. The data was then used to train the AI/machine learning algorithms so that they could do autonomous target detection specific to each sensor.

The next steps will pull together the research, enabling real-time automatic target detection that is then displayed to the user with an augmented reality engine. That way, soldiers can see the threats best identified by the system while on the move.

It’s one thing for a drone to provide data to a machine that then identifies the subject. It’s quite another to provide a human user a full picture of the battlefield all around them.

The Tactical Awareness via Collective Knowledge, or TACK, program is combining human and computer vision at the squad level.

TACK tracks the user’s eye movements, fatigue and gaze. That way, if a soldier looks at a point of interest, the device begins translating what they are seeing, or can even send a companion drone or other sensor to check out what is in their view.
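How TACK decides that a soldier is looking at a point of interest has not been described publicly. As a rough illustration only, one common way to turn raw gaze samples into a "point of interest" is dwell detection: if the gaze stays within a small radius for long enough, that spot is flagged. The sketch below assumes normalized screen coordinates; the `GazeSample` type, the `find_dwell` function and the radius and duration thresholds are all hypothetical and are not drawn from the Army program.

```python
from dataclasses import dataclass
from math import hypot
from typing import List, Optional, Tuple


@dataclass
class GazeSample:
    """One hypothetical eye-tracker reading."""
    t: float  # timestamp in seconds
    x: float  # horizontal gaze position, normalized 0..1
    y: float  # vertical gaze position, normalized 0..1


def find_dwell(
    samples: List[GazeSample],
    radius: float = 0.05,       # assumed tolerance around the anchor point
    min_duration: float = 0.5,  # assumed dwell time in seconds
) -> Optional[Tuple[float, float]]:
    """Return the centroid of the first dwell, or None if there is none.

    A dwell is a run of consecutive samples that all lie within `radius`
    of the run's first sample (the anchor) and span at least `min_duration`.
    """
    start = 0
    for end, sample in enumerate(samples):
        anchor = samples[start]
        if hypot(sample.x - anchor.x, sample.y - anchor.y) > radius:
            # Gaze moved away: restart the window at the current sample.
            start = end
            continue
        if sample.t - anchor.t >= min_duration:
            window = samples[start:end + 1]
            cx = sum(s.x for s in window) / len(window)
            cy = sum(s.y for s in window) / len(window)
            return (cx, cy)
    return None
```

In a system like the one described, a flagged point might then be handed to whatever component tasks a drone or other sensor; that hand-off is outside the scope of this sketch.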

This is being applied already within the Integrated Visual Augmentation System, a multi-function, mixed-reality goggle. Microsoft was recently awarded a nearly $22 billion, 10-year contract to produce the device.

Kott pointed to the IVAS as a prime example of how a variety of sensors, AI and machine learning can meld into a system that not only feeds data to the user but also helps translate that data into a human-friendly form that can be shared across platforms for a fuller picture of what’s happening at multiple levels of combat.

TACK is already being used but is expected to evolve significantly over the coming decades. Right now, the configuration is being tested to help soldiers with decision-making.

By 2025, developers hope that TACK can help command-level decision makers, in part by using sensing to assess squad situational awareness and share that across echelons.

From 2035 to 2050, they aim to modify systems on the fly, enable faster decision making, reduce soldier risk and provide greater battlefield intelligence and adaptability.

One early development project showing promise is finding new ways for computers to take degraded visuals, whether limited-resolution images, motion-blurred video or footage with poor visibility from rain, fog or dust storms, and make them usable for analysis or viewing without the large amounts of computing power available only far from the battlefield.

That’s especially important for target tracking and identification.

The novel approach uses existing visual similarity between objects, which helps match up and clear up the degraded video more quickly without requiring a top-to-bottom rebuilding of the image.

This works by “teaching” the algorithm what to look for so it can better identify what’s in the blurred image.

“Our scientists discovered you can teach a computer with deep neural networks, you can actually teach them how to find things in a very blurred picture without turning it into a good picture, much cheaper in computer power,” Kott said. “(The computer) can ‘see the tank’ even if you have a very poor picture in a dust storm.”

As that method becomes refined, the next step is to get it onto very small platforms such as small drones and ground robots, he said.

Todd South has written about crime, courts, government and the military for multiple publications since 2004 and was named a 2014 Pulitzer finalist for a co-written project on witness intimidation. Todd is a Marine veteran of the Iraq War.